Installing an Argo CD Instance in a vSphere Namespace: Enabling GitOps on VCF 9.0 (Part 02)

In Part 01 of this blog series, we explored how to deploy the Argo CD Operator on the Supervisor Cluster. In this post, we'll take the next step and create an Argo CD instance in a vSphere Namespace. Later in the series, we'll use this instance to create and manage vSphere Kubernetes Service (VKS) clusters with a GitOps-based approach.

Why Deploy Argo CD via the Operator in VCF 9.0?

Deploying the Argo CD instance through the Argo CD Operator in VCF 9.0 provides an integrated, lifecycle-managed GitOps solution built on the native Supervisor Services framework. The operator simplifies deployment and ongoing management by abstracting complex configuration and handling upgrades within the VCF platform.

Running Argo CD as a Supervisor Service provides tighter integration with vSphere Namespaces, stronger multi-tenancy support, and secure, policy-driven access control. This operator-based deployment model aligns with VCF 9.0's declarative infrastructure principles. It also extends GitOps to vSphere Kubernetes Service (VKS) clusters, vSphere Pods, and VMs, which simplifies their lifecycle management.

Foundational Setup for Enabling Argo CD in a vSphere Namespace

- Supervisor Cluster: The vSphere Supervisor Cluster must be enabled and in a healthy state.

- Argo CD Operator: The Argo CD Operator must be installed and enabled on the Supervisor Cluster.

- vSphere Namespace: A vSphere Namespace must be created on the cluster, with appropriate resource limits, VM classes, and storage policies assigned.

- CLI: The Argo CD CLI and the kubectl / VCF CLI must be installed on the bootstrap node. The kubectl vsphere login command used below also requires the vSphere plugin for kubectl. The CLIs can be downloaded from the Broadcom support portal.
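A quick way to confirm the CLI prerequisites on the bootstrap node is a small shell check. This is a sketch with a few assumptions. The function name check_tools is hypothetical. The default list assumes the vSphere plugin is installed as the kubectl-vsphere binary, which is how kubectl resolves the kubectl vsphere subcommand. If you rely on the VCF CLI, pass its binary name explicitly.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that the CLIs used in this post are on PATH.
# Defaults to kubectl, the kubectl vSphere plugin binary, and the Argo CD CLI;
# pass a different list of tool names as arguments to check other tools.
check_tools() {
  local missing=0 tool
  if [ "$#" -eq 0 ]; then
    set -- kubectl kubectl-vsphere argocd
  fi
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2   # report each missing tool on stderr
      missing=1
    fi
  done
  return "$missing"               # non-zero if anything was missing
}
```

Usage: `check_tools && echo "All prerequisites found"`.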

Deploying & Accessing an Argo CD instance in a vSphere Namespace

When deploying Argo CD in a vSphere Namespace, you can run it in either High Availability (HA) or non-HA mode. This is controlled by the replica count set for the core Argo CD components, such as the application controller, repo server, and API server. By default, Argo CD is deployed in a non-HA configuration with a single replica of each component, which suits development or low-scale environments. For production-grade or mission-critical use cases, increasing the replica counts enables HA mode, providing redundancy, scalability, and resilience across multiple pods. The resource requests and limits of each Argo CD component pod can also be customised.
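For comparison with the non-HA sample below, here is a sketch of an HA-oriented instance. A shell heredoc writes it to a file so it can be reviewed before applying. It reuses only the fields from that sample and raises the application controller, repo server, and API server replicas to 2. That value is an assumption, not a documented recommendation. Placing enableLoadBalancer at the spec level is also an assumption. Resource request/limit fields and any Redis HA settings are not shown in this post, so check kubectl explain argocd.spec before using this for production.

```shell
#!/usr/bin/env bash
# Sketch: write an HA-oriented ArgoCD manifest to a file for review.
# Placeholders in <...> must be replaced before applying.
# Assumptions: replica value 2; enableLoadBalancer placed at spec level.
# Resources/limits are omitted because their schema is not shown here
# (inspect with: kubectl explain argocd.spec).
cat > argocd-instance-ha.yaml <<'EOF'
apiVersion: argocd-service.vsphere.vmware.com/v1alpha1
kind: ArgoCD
metadata:
  name: <argocd-instance-name>
  namespace: <vsphere-namespace>
spec:
  version: <2.14.13+vmware.1-vks.1>
  url: https://<argocdapps-name>.<domain-name>
  enableLoadBalancer: true
  applicationSet:
    enabled: true
    replicas: 1
  controller:
    replicas: 2
  notification:
    enabled: true
    replicas: 1
  repo:
    replicas: 2
  server:
    replicas: 2
EOF
echo "wrote argocd-instance-ha.yaml"
```

After replacing the placeholders, apply the file the same way as the non-HA sample (kubectl apply -f argocd-instance-ha.yaml). kubectl get pods -n <vsphere-namespace> would then be expected to list multiple pods for the scaled components.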

Sample (Non-HA) Argo CD instance (argocd-instance.yaml)

```yaml
apiVersion: argocd-service.vsphere.vmware.com/v1alpha1
kind: ArgoCD
metadata:
  name: <argocd-instance-name>
  namespace: <vsphere-namespace>
spec:
  version: <2.14.13+vmware.1-vks.1>  # see: kubectl explain argocd.spec.version
  url: https://<argocdapps-name>.<domain-name>
  applicationSet:
    enabled: true  # optional component
    replicas: 1
  controller:
    replicas: 1
  enableLoadBalancer: true  # an L4 IP is assigned for UI access
  notification:
    enabled: true  # optional component
    replicas: 1
  repo:
    replicas: 1
  server:
    replicas: 1
```

- Log in to the Supervisor and apply the argocd-instance.yaml file

# kubectl vsphere login --server <supervisor-ip> --vsphere-username <username> --insecure-skip-tls-verify

Password:

Logged in successfully.

You have access to the following contexts:

carbon

svc-argocd-service-domain-c10

svc-cci-ns-domain-c10

svc-harbor-domain-c10

svc-tkg-domain-c10

svc-velero-domain-c10

**** Supervisor context verification ****

# kubectl config get-contexts

# kubectl apply -f argocd-instance.yaml

argocd.argocd-service.vsphere.vmware.com/argocd-carbon created
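The operator then rolls out the Argo CD components, which can take a few minutes. Besides reading the pod list by eye, a small filter can flag anything that is not ready yet. This is a hypothetical helper: check_pods is not part of any VMware or Argo CD tooling. It assumes the default kubectl get pods --no-headers column layout (NAME, READY, STATUS, ...). It treats Completed pods, such as the one-shot argocd-redis-secret-init pod, as healthy.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pods --no-headers` output on stdin and
# report pods that are not fully ready. Exits 1 if any such pod is found.
check_pods() {
  awk '
    NF == 0 { next }                      # ignore blank lines
    { split($2, ready, "/") }             # READY column, e.g. "1/1"
    $3 == "Completed" { next }            # finished one-shot pods are fine
    $3 != "Running" || ready[1] != ready[2] {
      print "NOT READY:", $1, $2, $3
      bad = 1
    }
    END { exit (bad ? 1 : 0) }
  '
}
```

Usage: `kubectl get pods -n <namespace> --no-headers | check_pods && echo "All Argo CD pods are ready"`.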

# kubectl get pods -n <namespace> # checking the pod status

NAME READY STATUS RESTARTS AGE

argocd-application-controller-0 1/1 Running 0 3m23s

argocd-applicationset-controller-667b9b4696-qh6zw 1/1 Running 0 3m22s

argocd-notifications-controller-678dc5f47d-6wnvk 1/1 Running 0 3m22s

argocd-redis-8bfc9d85f-8wm2x 1/1 Running 0 3m22s

argocd-redis-secret-init-xlq89 0/1 Completed 0 3m55s

argocd-repo-server-76cbcc8574-fx6r9 1/1 Running 0 3m22s

argocd-server-7659587546-lzsvx 1/1 Running 0 3m22s

- Accessing the Argo CD Instance via CLI

***** Get the external IP used to access the Argo CD instance *****

# kubectl get svc -n carbon -o jsonpath='{range .items[?(@.status.loadBalancer.ingress)]}{.metadata.name}{"\t"}{.status.loadBalancer.ingress[0].ip}{"\n"}{end}'

argocd-server <xx.xx.xx.xx>
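The external IP appears only after the load balancer has assigned it, so the command can return nothing for a short while after the instance is created. Below is a polling sketch that queries the argocd-server Service directly; the Service name comes from the output above. The function name and the default attempt count and interval are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll the argocd-server Service until the L4 load balancer
# publishes an external IP, then print it.
# Usage: wait_for_argocd_ip <namespace> [attempts] [interval-seconds]
wait_for_argocd_ip() {
  local ns="$1" attempts="${2:-30}" interval="${3:-10}" i=0 ip
  while [ "$i" -lt "$attempts" ]; do
    # Empty until an ingress IP is published in the Service status;
    # errors are discarded so the loop simply retries.
    ip=$(kubectl get svc argocd-server -n "$ns" \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    i=$((i + 1))
    sleep "$interval"
  done
  echo "timed out waiting for an external IP on argocd-server in $ns" >&2
  return 1
}
```

Usage: `ARGOCD_IP=$(wait_for_argocd_ip carbon) && echo "Argo CD UI: https://$ARGOCD_IP"`.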

**** Retrieve the initial admin password for login ****

# kubectl get secret -n <namespace> argocd-initial-admin-secret -o jsonpath='{.data.password}' | base64 -d ; echo

<initial-login-password>

**** Log in to the Argo CD instance via the CLI ****

# argocd login <argocd-instance-ip> --insecure

Username: admin

Password:

'admin:login' logged in successfully

Context 'xx.xx.xx.xx' updated
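For automation, the IP lookup, password retrieval, and login can be combined into one non-interactive step using the --username and --password flags of argocd login. This sketch assumes the argocd-server Service and argocd-initial-admin-secret names shown above. The function name is hypothetical, and the helper only works while the initial password is still the current one.

```shell
#!/usr/bin/env bash
# Hypothetical helper: log in to the Argo CD instance as admin without prompts.
# Usage: argocd_admin_login <vsphere-namespace>
argocd_admin_login() {
  local ns="$1" ip pass
  # External IP of the argocd-server LoadBalancer Service
  ip=$(kubectl get svc argocd-server -n "$ns" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
  # Initial admin password, stored base64-encoded in the Secret
  pass=$(kubectl get secret argocd-initial-admin-secret -n "$ns" \
    -o jsonpath='{.data.password}' | base64 -d)
  # --insecure skips TLS verification, as in the interactive login
  argocd login "$ip" --username admin --password "$pass" --insecure
}
```

Passing the password on the command line can expose it in shell history and the process list. This approach therefore suits short-lived automation better than shared hosts.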

**** Update the default password for the "admin" account ****

# argocd account update-password

*** Enter password of currently logged in user (admin):

*** Enter new password for user admin:

*** Confirm new password for user admin:

Password updated

Context 'xx.xx.xx.xx' updated

***** Verify the new password by logging in again *****

# argocd login <xx.xx.xx.xx> --insecure

Username: admin

Password:

'admin:login' logged in successfully

Context 'xx.xx.xx.xx' updated

*** CLI login with the new password succeeds, confirming the password change ***
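The password change and the verification login can also be scripted, for example when rotating credentials from a pipeline. This sketch uses the --account, --current-password, and --new-password flags of argocd account update-password. The function name is hypothetical, and the helper assumes an argocd login session is already active, as in the steps above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: change the admin password non-interactively, then
# confirm it by logging in again with the new value.
# Assumes an existing `argocd login` session for the current context.
# Usage: rotate_admin_password <argocd-ip> <current-password> <new-password>
rotate_admin_password() {
  local ip="$1" current="$2" new="$3"
  argocd account update-password --account admin \
    --current-password "$current" --new-password "$new" || return 1
  # Re-login with the new password to verify the change
  argocd login "$ip" --username admin --password "$new" --insecure
}
```

Both passwords appear as command-line arguments here. In practice, read them from a secret store or environment variables rather than typing them inline.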

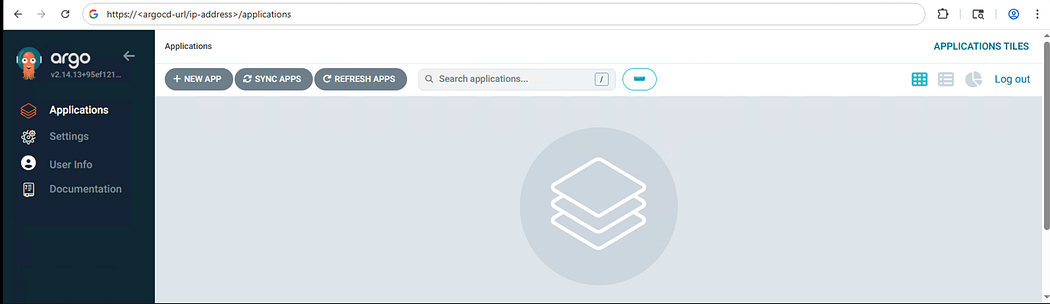

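- Accessing the Argo CD Instance via UI

Browse to https://<xx.xx.xx.xx> (the argocd-server external IP) and log in as admin with the updated password.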

Conclusion

In this blog, we demonstrated how to deploy an Argo CD instance in a vSphere Namespace on the Supervisor Cluster, laying the foundation for GitOps-driven cluster and application management. With the Argo CD Operator enabled and the instance deployed, we can now manage Kubernetes resources declaratively and at scale within the private cloud.

In the next part of this series, we'll go a step further and show how to automate the creation and management of VKS clusters with GitOps workflows. This brings consistency, repeatability, and full lifecycle control to your infrastructure.
